The Annie W. Riecker* Memorial Lecture, No. 8, Tucson: University of Arizona Press, 1962, 23 pp.

Posted May 9, 2008

On Sanity in Thought and Art

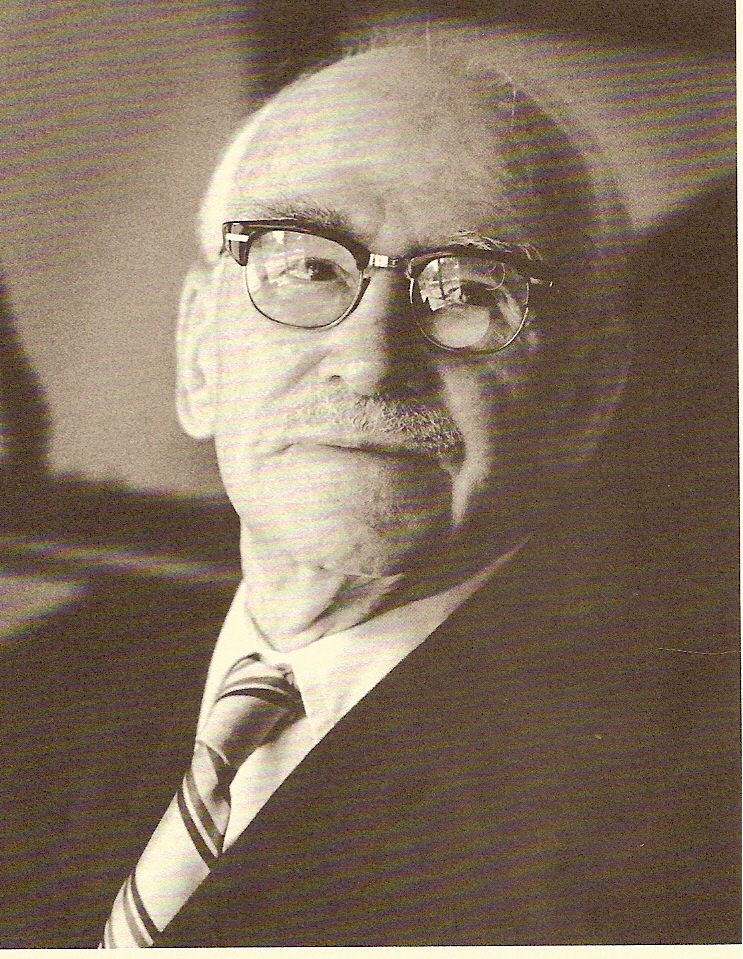

Brand Blanshard

I hope I may begin on a personal note, if I promise not to stay on it. I have now lived long enough to have seen both sides of a great divide. The first world war was a sort of watershed that separates everything on this side of it from the now strange and remote world on the other side. As I look back at that world before the deluge, it seems curiously static—as if fixed in amber and bathed in a gentle afternoon sun. Not that it felt like that to be in it; and no doubt the glow that it now carries is in part merely the illusion memory always throws over the past. But it is not wholly that, I think. At any rate, for those of us who were academics, it had an intellectual serenity and stability that seem to have disappeared under the wreckage of two wars. The philosophers and scientists of those days had their problems, plenty of them, and they knew they had a long way to go; but they were fairly confident that they were on the right track and had only to remain on it to reach their goal; there was a broad straight road leading into the future. In philosophy, in morals, even in criticism, something like a consensus of opinion seemed to be in the making.

Let me recall to your mind first what was happening in philosophy. The time just before the first war was a rationalistic heyday. There is a picture extant of James on his New Hampshire farm wagging a finger at Royce and saying “Damn the Absolute,” but Royce is smiling serenely, as well he might; for he knew that the pundits of philosophy were on his side and that James was looked on as a maverick and an amateur. In Europe the story was similar. There Bergson was the maverick, and the German system-builders dominated the scene. When as a wandering student, travelling of course without a passport, I went to Berlin in the winter of 1913, it was Paulsen I wanted to hear, and though when I asked the porter at the gate where Herr Paulsen was lecturing, the answer was “Ach, er ist todt, er ist todt,” I found that Wundt was still lecturing at Leipzig, and Windelband at Heidelberg, and I went to hear them both. They were of course tremendous scholars, but they were more than that. Even a naive youngster could feel in them, as their applauding students did, a passion for reason, a passion for articulating in intelligible fashion the great mass of detail that their historical and social studies had accumulated, a conviction that, however dense the difficulties, reason could drive a Roman road through them if it would.

Across the channel in England, James Ward was still lecturing at Cambridge, and Bradley at Oxford was still brooding in his rooms overlooking the Christ Church meadows. I sought them both out, as only a brash young American would; and being a student at Bradley’s college of Merton with rooms a few steps away, I had an excuse for seeing something of him. It is hard to convey to persons of another generation what an aura his name carried in those days. He never appeared at meetings; he never lectured or taught; many of the philosophy dons at Oxford had never so much as seen him; but they were all aware of him and afraid of him; there was a sort of electric field around the den where the old lion was holed up. He was formidable not only because he wielded a slashing dialectic and a powerful pen, but also because he was in such deadly earnest about his thought; it was his religion and his life.

Bradley’s conception of philosophy was the dominant conception of his time, but in essence it was not new; it had come, with some fresh flourishes, from Plato, Spinoza, and Hegel. The business of philosophy was to understand the world. The best way to do that was to start with the postulate that the world is intelligible in the minimal sense, and then go on to show how far it is intelligible in the maximal sense. The minimal sense is that the real is not self-contradictory. Bradley started with that as his test of truth and reality; what failed to pass it must be thrown out; and very little in religion, science or common belief did manage to pass it. The maximal sense is that the world is an interconnected system of parts, in which everything is connected with everything else as necessarily as in geometry. The aim of thought from first to last is at system; understanding means placing things in a system—at first in a little one, as when a child grasps the function of the chain on his bicycle, later in a larger one, as when he sees why democracy must place limits on freedom, ultimately in a complete one, in which nothing is left out. Thought is an activity governed by an immanent end. That end has two aspects. One of these is completeness, the embracing of all things within our knowledge. The other is order, the grasp of that logical interdependence which all things have on each other if the world is really intelligible.

This view made provincialism in philosophy almost a contradiction in terms. To test any piece of your knowledge, you had to go beyond it, then beyond the wider context to one still wider, like the ripples from a pebble dropped in a pool. Certainty lay at the end of an infinite expansion. Here Bradley the absolutist was in effect a relativist, while the traditional empiricist and rationalist were both by comparison absolutists, since both held that one could achieve certainty almost at the beginning of the process. The empiricist held that the judgment “that is my friend, Jones,” could be known to be certainly true by its correspondence with given fact, while Bradley said it could be so known only by its coherence with further perceptual judgments about Jones’ walk, dress, and habits, and these only by further judgments, which must stand or fall as a whole. The rationalist held, with Descartes, that no system could have more certainty than the axioms with which it started; Bradley held that even the axioms of mathematics were statements about the real, and must be tested, like other judgments, by whether they comported with our experience as a whole; the proof of the laws of identity and contradiction was merely that their denials would render any system impossible. Neither in knowledge nor in the universe are there islands any more. Science, history, and religion are all dependent on metaphysics for the testing of their assumptions and the synthesis of their results; metaphysics is in turn dependent on them for the material that it works with. Common sense, science, and philosophy are merely segments in one continuous effort of understanding, which is an effort to make everything intelligible through seeing its place in the system of things. The man who suffers from an intellectual cyst, that is, from any belief which resists criticism from his experience as a whole, is in that degree less than sane.
When we start talking with a man in an institution, he may seem a very sensible fellow until he suddenly remarks, “I see I must set you right on one matter, you know I am really Napoleon.” You may point out to him a thousand things that are inconsistent with his conviction; it is to no purpose; and if you corner him, he may be dangerous. His insanity lies in a loss of perspective regarding one of his beliefs. He is not wholly irrational; but neither is any of us rational altogether. Sanity, rationality is a matter of degree.

Bradley’s philosophy made sanity in this large sense the end and criterion in the sphere of thought. There was a sense in which the ethics of those days was an attempt to introduce the same idea into the sphere of practice. In ethics Bradley and Green were neo-Aristotelians; for them the end of the practical life was the balanced realization of one’s powers. In my student days this view was under attack by Rashdall and others as egoistic, and the prevailing ethics of the day was ideal utilitarianism, which held that our duty was so to act as to produce the greatest total good of all concerned. This good was understood to consist of results in the way of experience, for only experiences were intrinsically good or bad; books and flowers and pictures were not good in themselves; it was our experiences of them that were good. But then what makes an experience good? Moore and Rashdall wrestled with this problem and came out with an answer that seemed convincing at the time, but has not stood up well under criticism. They said that what made an experience good was a special quality of goodness, not a natural or observable quality like squareness or greenness, not even a quality that could be analyzed or defined, but a quality nevertheless, which could be seen to inhere necessarily in such states of mind as knowledge and happiness.

Leonard Hobhouse, that admirable but neglected thinker, here said no. The goodness of an experience was not a non-natural wraith of this kind; it was something altogether natural; it depended on and indeed consisted in its satisfying human impulse. When someone expostulated with McTaggart for not throwing his cat Pushkin out of the philosopher’s own chair before the fire, McTaggart replied that he was quite happy where he was because he could think about the universe, while poor Pushkin could not. Metaphysics was a good for McTaggart, because it satisfied a faculty and thirst of his nature; it was not a good for the cat, because behind its narrower brow neither faculty nor thirst was there.

In his masterly book, The Rational Good, Hobhouse accepts in the main the ideal utilitarianism of Rashdall and Moore, but says that the good by whose production we are to judge the rightness of our conduct is the greatest general self-realization. The fulfillment of any drive or urge of human nature is pro tanto good; it is to be repressed only when its fulfillment, by inhibiting other fulfillments, would mean less fulfillment on the whole. The conflicts between the goods of different persons are dealt with in the same way. Thus Hobhouse brought into ethics a principle that corresponded to Bradley’s principle in knowledge—what I have called the principle of sanity. The aim of thought was at breadth and harmony of judgment; the aim of practice was at breadth and harmony in the realization of impulse. Fanaticism, arbitrariness, self-will, asceticism, the repression of any impulse or any man except in the interest of fuller expression on the whole, was ruled out as unreasonable. Mens sana in corpore sano was the ideal for both the individual and the body politic.

Hobhouse, like Rashdall, was a mind of remarkable range, but neither of them, so far as I know, ever wrote on aesthetics. A widespread interest in the theory of criticism, however, was an inheritance from Victorian days. When I made my first comment on the world, “with no language but a cry,” the author of that phrase, Tennyson, was the most admired of poets, and Matthew Arnold, then only four years dead, was the most influential critic. Arnold detested wilfulness and triviality in literature, and was sure there was an objective better and worse, whether he found it or not. The anthropologists were reminding men, as Chesterton noted, that

The wildest dreams of Kew are the facts of Khatmandu

The crimes of Clapham chaste in Martaban;

and Kipling chimed in with the artistic parallel:

There are nine and fifty ways of constructing tribal lays,

And every single one of them is right.

The Victorians and Edwardians of course did not believe that morals were merely a matter of taste, and they did not want to believe that matters of taste were either, if that meant de gustibus non disputandum, and that one lay or lyric or landscape was as good as another.

But if there really was an objective better and worse in art, what was the standard to be? A weighty and timely answer came from that cradle of artists, Italy, and from Italy’s great philosopher, Croce. Croce answered that the standard was expressiveness. Anything was a work of art so far as it succeeded in expressing what it sought to express, and a painting or a sonnet or a single cry that expressed the artist’s intuition perfectly must be conceded to be a perfect work of art. When this doctrine reached Oxford, it caused excitement and also murmurs. It was strange doctrine to come from an idealist brought up on Hegel, for it implied that the content of a work of art was of no artistic importance; all that mattered was whether the content, such as it was, was well expressed. Bosanquet, who had written a history of aesthetics, presented an indignant refutation to the British Academy. Andrew Bradley, the philosopher’s brother, who was professor of poetry at Oxford, raised the issue in one of his lectures by asking whether one could write as great a poem on a pinhead as on the fall of man, and concluded that one could not. Here he had all the idealists behind him and probably most of the critics. The Anglo-Saxon tradition has never run to the narrower forms of art for art’s sake. Ruskin, who could not endure Whistler, thought that art should reflect all man’s aspirations; Arnold felt as strongly as Eliot does that a poet should write with the whole Western tradition in his blood; the poets in In Memoriam had voiced the misgivings of faith about science; Browning was presented at book length by Sir Henry Jones, the Glasgow philosopher, as a philosophical and religious teacher. When Bosanquet gave his Gifford lectures shortly before the war, he urged that the principle of individuality, which was just Bradley’s principle of breadth and harmony again, was a measure of goodness and beauty as well as truth.
“We adhere,” he wrote, “to Plato’s conclusion that objects of our likings possess as much satisfactoriness—which we identify with value—as they possess of reality and trueness.” Art itself is subject to the principle of sanity.

This was the sort of doctrine we were brought up on in those antediluvian days. Perhaps we were a little intoxicated by it, for it inclined us to think of history as a triumphant advance of reason in which freedom broadened “slowly down from precedent to precedent,” and would go on doing so. We were brutally awakened from our dream. Suddenly all the dams of civilization seemed to break at once. I was a bit of flotsam caught by the flood in Germany and washed up a few weeks later on British shores. Britain of course was tragically unprepared, and I remember wondering how those ragged squads drilling in London squares could meet the massed professionals across the Channel. The universities sent their young men in shoals into French trenches, from which the best of them did not return. There was a moratorium on speculative philosophy. It seemed remote now, and people did not fail to remark that it had been made in Germany. Royce in America died denouncing the Germany at whose feet he had sat so long. In England Hobhouse dedicated a book to his lost son in which he bitterly attacked Bosanquet’s theory of the state. The distinguished idealist, Haldane, who had reorganized Britain’s army for her, was excluded from the cabinet by the pressure of public opinion. When the universities reconvened after the war, the old philosophy was on the wane, and new voices began to be heard. A queer gospel, entitled Tractatus Logico-Philosophicus, arranged in numbered verses and prophesying the end of philosophy as heretofore conceived, appeared in Cambridge in 1922. The same gospel, translated now into intelligible English by a youth in his twenties named Ayer, appeared some years later in Oxford under the title of Language, Truth, and Logic. As the chairs in philosophy fell vacant, they were filled by a new breed of men. There is not, I think, one chair in England, Scotland or Wales, and there are very few in America, still occupied by men of the older persuasion.
One wonders if there has ever been so swift a revolution in philosophy. On the continent also something like a revolution has occurred, though here the Jacobins were not analysts but existentialists.

I began by saying that I had seen both sides of a divide in the history of philosophy. I now want to look at the hither side through glasses I brought with me from the farther side and could never quite get myself to discard. There is no time at the moment to test these glasses for their amount of distortion; but I must remind you that I am wearing glasses, that they certainly do distort in some measure, and that you would do well to check what I say with your younger and unspectacled eyes.

The philosophy that has now dispossessed that of Green and Bradley, Ward and McTaggart, even in their old haunts, is linguistic analysis. It came into general notice a year or two ago when it was attacked in the columns of the London Times by Lord Russell, and vigorously defended by its Oxford supporters. That it is of interest also to Americans is shown by the fact that the New Yorker allowed an article on it by a young Indian, Ved Mehta, to trickle along through a hundred pages of advertisements; any philosophy to which the New Yorker will devote a hundred pages has arrived. What is this new philosophy trying to tell us?

Unfortunately, that is very hard to say, since its advocates are averse to stating in general terms what they are doing; others have to do this for them. If I had to put the new philosophy briefly, I should say that it is an attempt to read G. E. Moore in terms of the later Wittgenstein. Let me try to explain. In 1926 Moore, in a state of reaction against metaphysics, wrote a famous essay called “A Defence of Common Sense.” In that essay he took the view that common sense was the test of philosophy rather than the other way round. Philosophers have often titillated their hearers by denying the existence of space, time, matter, other selves, and their own past, and while doing so have covered much paper spread out before them, have taken many hours by the clock to do it, have written with solidly material pens, have kept in mind throughout the prejudices of their readers, and have relied on their memory as to what these prejudices were. Moore thought there was something queer about this. He was far more certain, he said, that the things the philosophers were denying were true than that any of the arguments they offered against these things were sound. Does not that clearly show that common sense and not philosophy is the court of last appeal? He thought it did.

The linguistic philosophers accepted this argument of Moore’s, but considered that he had not quite understood what made it so strong. It was hardly plausible to say that the plain man was somehow a philosopher who could outthink the professionals at their own game. The strength of common sense lay rather in its language. Language has so developed as to fit like a glove the vast variety of our feelings, desires, commands, intentions, and perceptions; in its ordinary uses it expresses or reports experiences we have all had at first hand; and such reports it is idle to deny. To tell the plain man that there are no material things is to tell him that the phrase “material thing” has no correct application and this is absurd, for all he needs to do to refute it is what Moore did before an amused, if also bemused British Academy, namely to raise his two hands and say, “Here, these are examples of what I mean by material things, so of course they exist.” Can the philosopher really mean to deny this? If he does, we can only tell him to his moon-like face that he is talking nonsense. It is more charitable to surmise that he is really proposing to use old words in a new way, which, having no authority in accepted usage, is just a queer way. When Berkeley denied the existence of matter, he was not trying to banish what you and I mean by tables and chairs; he was proposing to use the word for something invisible and intangible—which was permissible but somewhat silly. When Bradley denied the existence of space and time, he could hardly deny that it had taken him a minute or two to spread his last sentence across the page; he was merely using the words “space” and “time” of certain ethereal webs woven by himself. Surely it is much safer to stick to the common usage of common sense.

Once we see the strength of ordinary usage, we can also see the weakness of philosophy. Philosophic problems arise just because we have been tempted into misusing words, and we should deal with them not by trying to solve them, which would be taking them too seriously, but by a sort of Freudian therapy—by seeing how they arose and thus dissolving them. Philosophers when they have left the straight road of common usage, have proved amazingly gullible. The whole Platonic philosophy, for example, is based on a verbal confusion. Plato saw that the members of a class were alike; this suggested that they had something in common, as a class of students have when they have the same teacher; this common something was named by a noun, and since nouns usually name things, it was taken as a thing. Thus universals came to be regarded as existents. But there are no such existents; there are only things resembling each other. With this simple insight the Platonic world of ideas comes falling down like London Bridge. Again people have seen other people laughing, crying, and talking. What makes them do these things is hidden from sight, but is called “he” or “she,” or a “mind,” and again it is assumed that these are names for an invisible and intangible substance, a “ghost in the machine,” whereas all that is really there is a set of capacities or dispositions for behaving in certain ways. And once the ghost is established there, the question arises how it can act on the machine or be acted on by it, and we have the old problem of the relation of mind and body. But the problem is wholly unreal, for there is no ghost in the machine; all that has been there from the beginning is a set of capacities or dispositions for behaving in certain ways. All these metaphysical puzzles are needless because they arise from avoidable misuses of words. 
We should cease to be troubled by them if we were willing to do two things, first, use words with their standard meanings; second, reflect, when such a puzzle does arise, that it must have sprung from some category mistake, that is from the application in one field of a use appropriate only in another.

There is much more, of course, in linguistic philosophy than this thesis about ordinary use, but this remains its most distinctive thesis. What is one to say about it who has been brought up in the older tradition of philosophy? It is sometimes thought to be a return to common sense. I must confess that the only common sense that seems to me authoritative in philosophy is that massive common sense which consists in the attempt to see things steadily and whole, and which I have described as philosophic sanity. Judged by this sort of common sense, that of the linguistic philosophers is a wraith that melts away when looked at. There are several reasons for saying so. For one thing, the notion that ordinary usage is infallible is nothing but a tiresome myth. Anyone who believes it must, as Russell points out, abandon his belief in physics. The plain man gazes up at the night sky and says “Look, there is an exploding star.” What he means by this impeccable English is that what he sees is happening out there now. This cannot be right if the physicist says it is not happening, that the event he thinks he is seeing was over, perhaps, years ago. Again, it is perfectly good usage to say that in the next room there is a table not now observed by anyone, that is brown, smooth, and hard. Berkeley would say that this is not so; and whether one agrees with him or not (I do in fact), he is surely not to be refuted by saying that he is confused about words. He knew what the plain man meant and he declined to accept it, saying he was quite ready to speak with the vulgar if he was allowed to think with the learned.

Secondly, to confine philosophy to the sort of question to which ordinary meanings supply the answer is to impose a crippling censorship on our thought. I have heard an eminent linguistic philosopher settle the problem of free will by saying that the statement, “I can push this inkstand” is good usage; it therefore has a clear application; I therefore can push the inkstand, which means that I am free to do it. Now, if “can” means here, as he took it to mean, merely that I am not prevented from doing it by any external restraint, the statement is true enough. But then it has nothing whatever to do with the real issue of free will, which the plain man has probably never thought of. That issue is not whether his volition would produce its normal effect, but whether there is any cause constraining his choice, and to rule this problem out because it does not use “free” in the ordinary sense is obscurantism.

Thirdly, is it true that philosophical problems arise out of verbal confusions? Some of them no doubt do. But some that are alleged to have done so have surely not done so in fact. It is hard to believe that Plato, or any other self-critical mind, has been so hypnotized by nouns as to think that they had to stand for substantives. And whoever says that men owe to verbal confusions their long wrestling with the problems whether God exists and is good, whether thought and feeling survive death, whether there is a trustworthy test for right conduct, whether beliefs are made true by good consequences, seems to me not to have entered into these problems at all. Philosophy springs straight out of human life, out of such longings as Descartes’s for certainty, or Spinoza’s for a better way to live, or even Bradley’s for a way of experiencing Deity. As the great philosophers have conceived it, even as it has been conceived by those few, such as Hume and Moore, whom the linguistic sect admires, it called for breadth of understanding and imagination. One can extract from metaphysics or theology a set of verbal puzzles if one wants to. But it is a new kind of philistinism to insist that this is all there is to them—a philistinism of which minds governed by the principle of sanity would have been incapable.

We have already suggested that philosophy after the war came to a fork in the road. British philosophy, followed largely by American, took the road of analysis; European philosophy took that of existentialism. What is existentialism? It is a reaction against what it conceives as an excessive reliance on reason, but apart from this and such minor statements as that all existentialists tend to be gloomy and to write badly, it is hard to say anything that holds of all of them alike. Some of them are atheists like Sartre, some of them theists like Marcel; some of them, like Heidegger, have offered heils to Hitler; others, like Sartre, three cheers for Stalin; for some of them, the dominant mood seems to be anxiety, for others nausea. It is unsafe to talk in general terms about a movement as amorphous as this, so let us focus our brief comment on the father Abraham of the tribe, that great Dane, Soren Kierkegaard.

Regarding reason, Kierkegaard held that it breaks down at two vital points. It cannot deal with existence and it cannot deal with God. It cannot deal with existence because it is at home only among essences, and existence is prior to essence. This sounds mysterious, but it is perhaps less so than it seems. If you look at an apple, you perceive something round, red, and smooth. These are qualities, characters, or essences, that is, contents that can be perceived or thought about. Does the apple reduce without remainder to a set of essences of this kind, or is there something about it that is over and above these? You can easily test that, existentialists would say. All these characters might appear in imagination, but that would not give you a real apple; for that, the characters must exist in space. Now existence is not just another character; it is that which, when added to characters makes them real, while not being a character itself. And since it is not a character—not a quality or relation or any set of these—it cannot be perceived or thought. The same holds of a self. Like an apple it too has a content, a set of conscious percepts, concepts, and so on; but the content of its consciousness does not exhaust the self, for that content happens to exist in time, which is surely the most important thing about it. And this existence is something that thought can never seize. It is real, but inexplicable and unintelligible.

This is perhaps the most important single point in existentialism. Is it valid? The rationalist finds it unconvincing for two reasons. First, an entity that is neither a character nor a relation nor a set of these, an “it” of which the question “What is it?” must forever be irrelevant, a something that is nothing in particular, sounds uncommonly like nothing at all. Secondly, the existentialist forgets that there is an alternative view. One may say, as Montague did, that what distinguishes the imaginary from the real apple is its appearance in time and space. But since spatial and temporal relations are themselves characters, we seem not to need existence in any other sense. It may be objected that real apples and their relations are vivid and distinct in a way that ideal ones are not; but this is irrelevant, for vividness and distinctness are themselves characters again. To be sure Bradley himself wavered on this point; in the famous passage of almost Kierkegaardian revolt against Hegel in which he attacked “the unearthly ballet of bloodless categories”—the only passage from a work on logic to reach the Oxford Book of English Prose—Bradley confessed that a world of universals seemed to him very dreary and ghostly. But Bosanquet asked him what precisely he wanted beyond these universals, and since he was unable to answer, he withdrew from his brief incursion into existentialism. They both ended by saying that you will never reach the unique in an individual by peeling off its attributes and relations, since it will turn out, for all your troubles, as coreless as an onion; what you must do is just the opposite, namely include more and more of those relations that alone can specify it into uniqueness. Pure being, as Hegel contended, is hardly distinguishable from nothing, and in pure existence, if you ever reached it, you would have left even that wisp of content behind.

Suppose you were to admit that existence somehow exists; even so you have to deal with it in terms of content. Heidegger, to be sure, gave his profound inaugural address at Freiburg on Nothing, and he has even held that this Nothing goes nothinging about in alarming fashion. But when existentialists condescend to cases, it always seems to be some humble concrete thing, myself or this apple, that is doing the acting, and doing what it does because of what it is. And we may as well let them worship at their strangely empty shrine so long as, when they emerge from it, they talk and act like the rest of us.

I said, however, that Kierkegaard’s existentialism had another side. It is this side that is most familiar, for it has been appropriated with showers of blessing by theologians in need of a philosophical imprimatur. Kierkegaard was brought up in a household where theological discussion was the order of the day, and by a father to whom revealed religion was the most tremendous of realities. After living for long in a hothouse, young Kierkegaard went to Berlin and studied Hegel. He became a sceptic, but soon returned to his father and the sunless religion of the big dark house in Copenhagen. But no one comes back from Hegel quite the same. Kierkegaard saw that if the men of faith were to save their faith from Hegel, if they were to stay his “new conquering empire of light and reason,” they must make a clean break with reason; otherwise the rationalists would use the paradoxes of religion as a Trojan horse to invade and destroy it. There is no use in fighting fire with fire; better fight it with water; quench reason with faith. Admit frankly that faith is as full of paradoxes as the critics say it is, and then answer that it does not in the least matter, that on the level where faith moves, the laws of logic and ethics are transcended so that to demand intelligibility in religion is impious, an attempt to imprison Deity in the little cage of our own understanding.

This doctrine has become epidemic in our theological schools, which shows that there is something in it answering to a need. Faith is threatened again by reason, this time by the rising tide of scientific knowledge which is secularizing the world. But do we have here a satisfactory defense of faith? I cannot think so, for it carries too much that is inimical to both faith and knowledge. It undermines knowledge, because if the contradictions in which Kierkegaard revels can really be true, then logic itself is no safe guide; and if logic goes, then nothing can be depended on in the whole range of our “knowledge.” If what reason warrants as true is invalid in theology, then reason as such is a false guide. The doctrine is likely also to be fatal to faith. For if faith can tell us, as it did to Abraham, in that legend so beloved of Kierkegaard for its rebuke to our moral certainties, that what conscience and reason condemn may nevertheless be our duty, then how are we to distinguish the inspiration of the prophet from that of fanatic or charlatan? Reason may be a poor thing, but it is the best we have, and the theology that discards it may live to rue its recklessness. If it succeeds in persuading men that their reason and their religion are really in conflict with each other, there can be little doubt which in the long run will come out second best. It will not be reason.

We have been remarking on new developments in philosophy and religion; has anything important been happening meanwhile in the field of ethics? Fifty years ago moralists seemed to be converging toward a rough consensus. There was an objective and universal standard of conduct. Actions were to be appraised through their consequences, and what was important among these consequences was the fulfilment of human faculty. At any rate, Paulsen in Germany, Hobhouse and Bradley in Britain, and Dewey in America, were so far in tune, though each with grace-notes of his own. Hobhouse’s panorama of “morals in evolution” showed the advance of morals as a steady increase in the control and ordering of impulse by reason, and he thought that the advance showed an accelerating spread. But storms were brewing as he wrote. Since then there have been at least four major waves of calamity for this old position, which have all but overwhelmed it and its high hopes.

The first of these, like logical positivism, was stirred up in Vienna. A courageous student of the oddities of human behavior, named Freud, trying to explain such things as slips of the tongue, dreams, and sudden rages, concluded that to explain them plausibly, we must push the boundaries of mind far down into the unconscious. The more he probed into this sub-cellar of the mind, the more convinced he became that this was the essential part of it, that it was filled with unruly denizens, and that the respectable dwellers upstairs were scarcely more than puppets of the unruly mob below. Our attempts to justify our conduct are less reasons than rationalizations, that is, self-deceptions designed to make what this submerged crew wants us to do look like what we ought to do. Just below the polished floor on which the chairman of the board of deacons walks about in such seemly fashion lies a set of cave men, held in precarious check.

To many this theory itself seemed to be a product of irrational misanthropy. But then came the second and most terrible of the waves, a double and tidal wave of two world wars, and they invested the cave-man theory with a horrible plausibility. We had read of Attila and Jenghis Khan, but these monsters seemed buried safely in the dark ages. We never anticipated their resurrection in the Himmlers and Eichmanns of the most scholarly nation in Europe. Nor was the moral breakdown confined to these men and their henchmen. In talking recently with a woman who had escaped a concentration camp, I learned what I had not quite realized before, that for much of their worst work the jailors could rely upon volunteers among the prisoners themselves, who for a bit of extra food or more tolerable quarters were ready to sell out their comrades. Some of these were apparently kindly people who broke sickeningly under the strain. Many of us must have asked ourselves whether we, or any of our respectable kind, were quite safe against the atavism of the Neanderthal “id” within us.

A third though milder wave of dismay was stirred up by the anthropologists. Boas, Malinowski, and Westermarck collected an immense mass of facts appearing to show that in no major relation between human beings, between parent and child, for example, or man and woman, was there any stable or common pattern of conduct. Popular books by Ruth Benedict and Margaret Mead were soon disseminating the notions that right and wrong were relative to cultures, and that talk of objective standards in ethics was naive and provincial. Many youths who had served in France or Japan or the South Seas were able to verify this variability for themselves. In our sociology departments the relativity of morals became almost a truism.

Few of these anthropologists were trained philosophers, and for a time it was easy to answer that they were going beyond their brief, that no description of what is can settle the question what ought to be. But here came the fourth wave, which supplied the anthropologists with support from an unexpected quarter. It was the rise of a subtle philosophical school which undertook to offer proof that objective standards were meaningless. According to this new school, the statement that something was good, or some action right, was not strictly a statement at all; it was an exclamation, an expression of feeling, a cry of attraction or repulsion like “Cheers!” or “shame!” If anyone did so exclaim, you could hardly say “Yes, quite so,” for in what he said, there was nothing true or false; furthermore an exclamation can be neither argued for nor refuted. All value judgments were thus removed at one stroke from the sphere of the rational. The old idea that the good life was the rational life, or indeed that any conduct was more rational than any other, was a pleasing but rather self-righteous illusion. Lord Russell, who was converted to this way of thinking in middle life, confessed that he did not like it, but added that he liked alternative theories still less.

What are we to say of this massive drift toward ethical scepticism? One cannot dismiss these theories as merely false, for there is truth in each of them. Nevertheless, they do seem to me, one and all, to be at odds with what I have called the principle of sanity in thought. They are all generalizations from too narrow a base, which when worked out into their implications, collapse into incoherence.

Many people think of Freud as the great anti-Aristotle who has shown that man is an irrational animal and some of them are carrying over into philosophy Freud’s method in religion. Just as the plain man’s belief in God is a father-substitute, Bradley’s belief in an Absolute is a passionate reaching out for security; even the idealism of the happy and healthy Berkeley arose out of infantile abnormalities connected with sex and excretion; and indeed it would appear that all theorizing is governed by non-rational pulls and pushes. Now no one can really hold to this consistently. No one supposes that beliefs in mathematics and physics are the puppets of passion; we know that even in psychology some men can stick to the evidence better than others; we know that unless they could, Freud, who was clearly one of them, would have no claim to be heard. It has often been pointed out that if all theory is the product of non-rational desires, then so is the Freudian theory, and in that case why should we believe it? The Freudian who believes his belief that men are irrational to have been arrived at rationally, is rejecting his own theory, for he believes that we can so far be rational after all. And then have we not returned to Plato and Bosanquet with their heartening insistence that men are rational, though imperfectly and brokenly so?

Is not this the answer also to talk about war revealing the cave-man in us all? Many men did break with their ideals and principles under the terrible strain of war, but some did not; one thinks, for example, of the heroic German pastor, Dietrich Bonhoeffer. But what if it were proved that under physical duress we all have our breaking-point, what would that prove? Not surely that we are cave-men in disguise, but rather that our powers of normal thought and feeling are conditioned by our frail bodies, and we knew that tragic fact before. To say that a man is really or essentially what he is under extremes of misery or pain is to write him down unfairly.

May I confess that I remain skeptical, too, not of the facts that the anthropologists have supplied us, but of the conclusion commonly drawn from these facts? What they show is that men differ widely—if you will, wildly—in their moral practices. They do not show, I suggest, that men are fundamentally different in their moral standards, because that would mean a fundamental difference in their scale of intrinsic values, and this seems not to have been made out. To show that the Aztecs resorted to human sacrifices is not to show that they approved of murder, or that their ends in life were basically different from ours; it may only show that they lived under religious delusions. If we held the unhappy belief that only by placating an angry deity with an annual sacrifice could the crops be raised on which the lives of all depended, is it so certain that we should act differently ourselves? When one comes to ends instead of means, the great intrinsic goods to which life is directed, do we not find that men agree fairly closely the world over? We have yet to come on any corner of the Sahara or Antarctica where men prefer pain to pleasure, ignorance of the world to knowledge of it, fear to security, filth and ugliness to beauty, hatred to friendship, privation to food and drink and comfort. So far as one can see, the Russians and the Chinese want very much what we do; we differ from them not fundamentally, about ends, but practically about the most effective means of reaching them. Indeed behind the bitterest debates in the United Nations about colonialism and injustice you find this very assumption that the needs and goods of human nature are everywhere the same; that is what makes the debate possible; the have-nots of the world are saying in chorus, “what we want is what you want, and largely have; now help us to get it too.”

But according to our emotivist colleagues, it makes no sense to talk about what is objectively good, or good for all men. To say that anything is good is to express how I feel about it. When Mr. Zorin and Mr. Stevenson both say that oppression in Angola is bad, they are expressing no common belief, and when Mr. Zorin says that Russian control in Hungary is good and Mr. Stevenson that it is bad, they are not differing in belief. Such “judgments” are exclamations. Now this is the sort of theory that would have occurred only to a philosopher, and can be retained, I suspect, only by the philosophers described by Broad as “clever-sillies.” I do not think it can be consistently believed or lived by. It implies, for example, not only that we never agree or differ in opinion on a moral issue but also that we never make a mistake on any such issue, that nothing in the past was either good or bad where it occurred, that objective progress is meaningless, that no one can show by evidence that any moral position is sound, or even adduce any relevant evidence for it, that approval is never more appropriate than the opposite, since there is nothing good or bad in the object at all, that personal or national differences over moral issues are incapable, even in theory, of rational solution. I cannot stay to argue these points, much as I should like to. I can only say that I do not think that Russell or Ayer or Carnap has dealt with them convincingly, and that until someone does, I shall continue to believe that there are objective moral standards binding all men, and open to study by a common reason.

May I turn now to the last field mentioned at the outset, that of criticism? Those of us who began our work before the flood had hopes that even in such treacherous fields as music and painting and poetry, some sort of standard of judgment was emerging. Not that we wanted all artists to be alike, which would be a bleak consummation, even east of the Berlin wall; but that there might be some consensus as to what made a work of art worth while. Need I say that in this field the confusion is worse confounded, if that is possible, than in the field of ethics? What has happened?

What has happened is the gradual extrusion of meaning from art as something aesthetically irrelevant. Until far on in the Victorian period, painters were still working under the influence of a theory as old as Aristotle and sturdily defended by Sir Joshua Reynolds, that a painter should concern himself not with anything his eye happened to light on, but only with things that had some meaning beyond themselves. By preference this meaning would be universal, that is, would interpret some central human experience and have something to say to all men. Thus Reynolds in his own Age of Innocence was trying to catch the spirit of childhood; just as Raphael in the Sistine Madonna was trying to record for us the rapt joy of motherhood. Now this, said the later painters of the nineteenth century, may be philosophy or psychology, but it is not art, and they set about to recapture what the eye actually saw, as distinct from what the mind imported into it. This was the period of the impressionists, of Pissarro, Monet, and Renoir. The next step was an insistence that within the crude mass of what the eye sees, there must be a singling out of satisfying form; so post-impressionists like Cezanne arranged their lines and planes into pleasing over-all designs. Hard on their heels, however, came the cubists, who pointed out to them that they were still painting figures of clowns and ballet girls; but if it was really form that counted, were not these clowns and ballet girls expendable? Roger Fry thought they were.
He records how he once saw a signboard done by Chardin for a druggist’s shop, representing a set of glass bottles and retorts; and he says, “just the shapes of those bottles and their mutual relations gave me the feeling of something immensely grand and impressive, and the phrase that came into my mind was, ‘this is just how I felt when I first saw Michelangelo’s frescoes in the Sistine Chapel.’” But if it was the shapes and their relations that did this for him, why were even bottles necessary? So he and many others moved on into abstract art, the art of Kandinsky and Mondrian. Was this the end of the line? No. Both these latter artists conceived themselves as realists; they were students of nature, who sought to find there forms they could use. “But why should we follow nature at all?” was the inevitable next question, to which came the inevitable answer, “there is no reason whatever.” So we come to the age of non-objective painting in which we live. In this painting not only things and persons have vanished but also every recognizable form, and what remains is an outgushing of the painter’s mood.

Is this a consummation to be wished? It certainly has its advantages. It confers the title of artist on a great many people whose claims might otherwise have passed unnoticed. I am myself almost moronically helpless with brush or pencil, but I have been assured by an art critic that if I began with an experimental daub and went on from there as the spirit moved, I too would produce a work of art. I am afraid the inference I drew was not that I am a mute inglorious artist, but that standards in art are in the course of disappearing. This impression gains color from the ease with which the public and even the critics can be hoodwinked. A London mother, at a venture, sends in an anonymous sketch of a doll made by her eight-year-old daughter, and it is gravely hung in Burlington House among the works of senior masters. Dr. Alva Heinrich of the Psychological Institute of Vienna University arranged an exhibition of thirty pictures, half of which were by such artists as Miró, Donati, and even Picasso, and the other half by schizophrenic patients in a mental hospital; she then gave to 158 persons the task of saying which were which. They were wrong in just about half of their identifications, meaning that they found no grounds for distinction at all. In Paris, on a bet, a landscape by Modigliani valued at $15,000 or more was hung in an exhibition and sale of amateur art, and tagged at $25. Though many of the other pictures were quickly sold, the one picture that was the work of a supposed master had no takers to the end. The American National Academy of Design awarded a prize to a picture which, it was afterward found, had been hanging on its side. 
When some mirth broke out about this, an indignant connoisseur wrote a letter which I have in my files insisting that what it showed was not the obtuseness of the selecting committee but the real distinction of the picture; only a Philistine would suppose that the sort of meaning which could be affected by a picture’s being on its side or upside down had the slightest importance; the aesthetic value would be precisely the same.

Now I think I see his point. But if we take to be purest and best the art that has left meaning behind and reduced itself to an arabesque or an exclamation, how much, I wonder, will this purified art still have to say to us? The art that flamed from the walls of renaissance Florence and Venice was, I suppose, impure and Philistine art, but it did speak with power to the people who gazed on it. Perhaps the new fashions do too, but if so, they speak to the specialists, not to the many. A few years ago I went in succession to two exhibitions in London, one of recent Russian, the other of recent American, painting. By western standards the Russians were naïve. They ran to such things as life-like portraits of eminent Russians and scenes of peasants bringing in the sheaves. But they spoke simply and pleasingly to all who cared to look. The American exhibition was sophisticated in the extreme. It was entirely non-objective, the only forms in it that were dimly recognizable being a few violently distorted female torsos; for the most part, the canvases were gigantically magnified Rorschach tests. I came away, as others did, with conflicting feelings. Russian art is not free; ours is. But is it quite certain that ours is? May it not be that the new passion for meaninglessness is in itself a tyrannical fashion that, like some new mode in hats or gowns from a Paris designer, is imposing itself for a time and is picked up most eagerly by those without convictions of their own?

A critic could raise parallel questions about much current poetry. There stands out in my memory a remark made to me by the head of a large publishing firm in London: “My firm will look at any sort of manuscript except a book of poetry.” In that he thought the public no longer had any interest. To be sure, that was some ten years ago, and since then an exception seems to have occurred. John Betjeman has scored a remarkable success, so far as number of readers goes—the greatest success, it is said, since Byron. Betjeman is no great poet, but this makes his success the more significant; he must have something that the public is hungry for. What this is, in part at least, seems clear: he believes that verse should sing, and that it should communicate. Most poets of recent decades appear to have abandoned the effort after these ends. The result is that they stammer to each other in corners, out of ear-shot of the world. I think that here again we find a failure in sanity.

But what has poetry to do with the intellect? it may be asked. A. E. Housman said that poetry need have no meaning at all, and that his own test of it was whether, if he repeated it while shaving, he tended to cut himself. His examples of meaningless verse are not very convincing. But in any case, intelligence in art may show itself in more than one way. It may show itself, for example, in the grasp of what is essential to a given art as distinct from what is eccentric in it. Now music lies so near the heart of poetry that its loss is crippling and its deliberate forfeiture stupid. A well-wrought rhythm has an almost physical appeal to us, whether it is the rhythm of a marching band, which, William Lyon Phelps confessed, made him drop everything and go off in pursuit, or the somewhat similar drumbeat of Macaulay’s Lays, or the more delicate measure of one of Housman’s own lyrics; the appeal of this recurrent beat is connected in some obscure way with the very throbbing of our pulse. Having something organic about it, the perfect marriage of sound and sense is usually conceived not by contrivance, but by a welling up from unconscious deeps; Housman has reported of one of his poems that the first two stanzas came to him on a walk on Hampstead Heath; the third came with “a little coaxing after tea”; the fourth required a year, and thirteen deliberate re-writings. The conscious super-ego in these matters cannot replace the dynamic id, but it can supply the blue pencil; it can cultivate the sort of consciousness that makes for craftsmanship.

Now the trouble with contemporary poetry is that its super-ego has gone AWOL. This has left its practitioners without an ear, and therefore without much music in their souls. Many present-day poems, if printed continuously rather than in lines, would give the impression of being peculiarly cacophonous prose. Robert Frost has written some things that sing; Masefield has written more; but these are survivors of an earlier day. The last poet I can think of whose work was uniformly the product of a fastidious ear was Housman himself, who died in 1936.

With rue my heart is laden

For golden friends I had

For many a rose-lipped maiden

And many a light-foot lad.

By brooks too broad for leaping

The light-foot boys are laid;

The rose-lipped girls are sleeping

In fields where roses fade.

Could anything be simpler than that? It is so simple that only a master craftsman could have done it. Just where are the craftsmen who could do it today?

Another thing about it: it is intelligible; though it is pure poetry, no one could fail to catch its meaning at the first exposure. The reader of contemporary verse feels rather like Carlyle who, when listening to the talk of Coleridge, thanked heaven for occasional “sunny islets of the blest and the intelligible.” Having heard a bit of Housman, would you now please listen to this, contributed to one of our most reputable monthlies by one of our most celebrated poets.

I said to my witball

eye, he lid-wise, half hoodwinked, jell

shuttered low,

keep out, keep out what rainfalls

of hindsights or foresights would

rankleroot

house, guts, goods, me

but my eye was a sot

in a bowl of acids, must lidlift to know:

and hailrips had entry, enworlded us, inlet a sea at our cellars,

wracks,

the drag and tow.

My impulse to mutter at this as neither very moving nor very luminous is checked by my memory of having heard a distinguished critic assert that there is an advantage in leaving it uncertain what a poem is about, since it gives the reader his freedom; he can take it as Romeo to Juliet on the balcony, or a portrait of the artist as a young man.

Now when I hear this sort of thing, I also hear what W. H. Clifford called “a still small voice that murmurs ‘fiddlesticks.’” There is more in poetry than a gnarled vocabulary plus Freudian free association; at that rate anyone can write poetry, which means that poetry has lost its standards and lost its way. It seems to be about as easy to hoax the critics in verse as it is in painting; indeed it has been done over and over again. We have all heard of the two Australian soldiers, Lieutenant MacAuley and Corporal Stewart, who decided one afternoon to invent a new poet named Ern Malley, and amused themselves by making a volume of poetry out of tag ends of phrases, some of them thought up by themselves, some culled from the books around them, for example, a U. S. government report on the drainage of breeding grounds of mosquitoes. “The only principle governing the selection was that no two consecutive lines would make sense.” When the book appeared, a leading Australian literary magazine, in a review of thirty pages, said that it “worked through a disciplined and restrained kind of statement into the deepest wells of human experience”; and an American literary quarterly praised it as showing “an unerring feeling for language.” The Wesleyan University Press has recently published a most amusing account of how Witter Bynner and Arthur Davison Ficke invented a new school of poetry. They wrote a set of forty-four nonsense poems and had them printed in a book, called Spectra, by two new poets named Anne Knish and Emanuel Morgan. Edgar Lee Masters wrote that Spectrism was “at the core of things and imagism at the surface”; John Gould Fletcher praised the Spectrists’ “vividly memorable lines”; Eunice Tietjens wrote of the book, “it is a real delight”; Harriet Monroe accepted some of Emanuel Morgan’s poems for publication in her magazine Poetry; Alfred Kreymborg devoted an issue of his critical magazine, Others, to the new spectral school.
Bynner and Ficke had some eighteen months of laughter before they revealed that the book and the school were one great spoof. In 1940 James Norman Hall the novelist thought he too would go into the poetry business, and published in Muscatine, Iowa, a book called Oh Millersville, by a certain Fern Gravel. Shortly afterward the New York Times was saying “We have found the lost Sappho of Iowa,” and the grave Boston Transcript intoned, “the book is amazing, amusing, full of the human scene, and not to be missed, because there can’t be another like it in the world.” After six years, Hall told the whole story in the Atlantic in an article, “Fern Gravel: a Hoax and a Confession.”

Poetry, no more than art or theology, can surrender itself to meaninglessness without capitulating at the same time to charlatans. Perhaps Arnold asked too much of it when he said that it should be a criticism of life, for poetry is not philosophy. But after all it can hold ideas—even great ideas—in solution. Most enduring poetry has been an implicit criticism of life, and most men can absorb such criticism more easily from the poets than from the bleak logic of the philosophers. It was Sophocles, not Socrates, of whom the memorable remark was made that he “saw life steadily and saw it whole.” Though the Canterbury Tales are really tales, and Macbeth and Lear are only plays, and Gray’s Elegy only a lament in a country churchyard, it is hard to see how anyone could read them responsively and not be wiser and better for it. They talk of ageless things, love and ambition and joy and death, which are central in every life; and they do so in a way that makes us accept these things with more understanding and reconciliation. Sanity is not the special preserve of egg-heads; indeed it is apt to elude them and to grant itself to those who engage the world more generously. There is a sanity among values, just as there is a sanity among beliefs, and it lies in the power of bringing a broad experience to bear on each particular point as it arises. It is not cleverness but wisdom, and the wisdom not of the serpent but of Solomon. It does not run to verbal conceits, or adventures in punctuations, or poems that are acrostics. As John Morley said, you cannot make a platitude into a profundity by dressing it up as a conundrum.

What a tirade this has been! It has sounded, I am afraid, like the Jeremiad of an irredeemable old fogey. It is not quite that, though things are now changing at such a pace that we are all likely to be outstripped if we are granted a normal span of life. These recent decades have been impetuously hurrying years, in which we have come a tremendous distance. When I look back to the other side of the divide and recall that in those days a touch of pneumonia or tuberculosis or erysipelas might be a death warrant, that the men who worked in mines or on the railroads did ten or twelve hours a day, that there were few automobiles and no planes, that in the lonely farmhouses there were no radios or television sets to keep them in touch with the world, and that the housewife’s necessities of today—electric refrigerators and washers and vacuum cleaners—were all but unknown, I recognize that our advance in many ways has been enormous.

What then am I saying? I am saying that while this technological giant we have fashioned has been striding forward with seven-league boots, the human spirit has been trotting along behind it, breathless and bewildered. It has lost its sense of direction and of relative importance; it has lost confidence in reason, it has lost those standards of sanity that keep the rational mind on course, and enable it to tell excellence from eccentricity, and distinction from caprice. A hundred years from now men will look back with wonder at eminent philosophers insisting that the business of philosophy is with linguistic usage, at eminent theologians pronouncing reliance on reason to be sin, at eminent moralists reducing moral judgments to boos and hurrahs, at eminent psychologists refusing to call Greek culture better than Polynesian, at eminent artists straining after the meaningless, at eminent poets flocking to the cult of unintelligibility.

But the human mind, after all, is a gyroscope that tends in time to right itself, and its past gives us some idea of what righting itself would mean. The main tradition in philosophy is one that runs down from Plato through Hegel to Whitehead, a tradition that has stood for system and sweep of vision—and it will come back. The main tradition in ethics is one that has run from Aristotle through Spinoza to Butler and Green and Bradley; it too will come back. There is a great tradition in criticism that has run from Longinus through Goethe to Arnold and, at his best, Eliot; surely that will revive. These traditions are not really dead; they are only sleeping. This shrill shout of mine is an attempt to wake them up.

* “Mrs. Annie W. Riecker was born in Birkenhead, England. An Arizona Pioneer, she came to Prescott in 1877 with her husband, Paul F. Riecker, an engineer with the U.S. Surveyor General’s Office. Her husband was one of the first to survey the Grand Canyon. He also established a number of county lines in Arizona. The family moved to Tucson in 1880. Mrs. Riecker had four children: Fred, Eugene, Edna, and Eleanor. The Annie W. Riecker Lectureship Foundation was established by her daughter, Mrs. Eleanor Riecker Ritchie, with a $10,000 endowment to the University of Arizona in 1953.” [From the pamphlet’s inside front cover.—A. F.]